Carta Healthcare delivers enterprise clinical data management solutions: an AI-powered platform, rooted in deep clinical expertise, that helps health systems unlock their enterprise data, integrate diverse sources with robust provenance, and optimize workflows to scale registry automation and patient care. Their mission tackles a persistent, quantifiable burden across hospitals and quality programs – administrative and documentation work that routinely consumes 15 or more hours per specialist per week – taking time away from patients and delaying decisions. By pairing modern AI with experienced clinical abstractors, Carta focuses on making abstraction faster, more accurate, and audit-ready in a domain where compliance and trust are non-negotiable.

By late 2023, Carta’s growth ambitions were running into the realities of an evolving stack. Several legacy components had accreted complexity. New customer deployments were slower than internal targets. The AI-assisted abstraction pipeline – built largely on classic NLP approaches rather than LLMs – needed tightening, but the pressing question was bigger: LLMs were advancing fast, and leadership needed to know if – and exactly how – they should be used in production. The risk was practical and immediate: the wrong choices would compound downstream, delaying registry work, consuming scarce abstractor time, pulling engineers into firefighting, or creating privacy exposure. Carta asked for an unflinching evaluation of its AI approaches, architecture, and team workflows – with a sharp focus on when LLMs add real value, how to use them safely, and how to enforce HIPAA-aligned boundaries from day one.

They chose datarabbit for rigor and pragmatism. In Q4 2023, we interviewed technical stakeholders and delivered a rapid, end-to-end evaluation of the stack, data flows, models, and tooling, along with a concise Technical Analysis and Recommendations document, prioritized mitigations, and reference architecture and process diagrams designed for immediate, phased rollout. We stayed engaged through H1 2024 to guide the evolution – helping sequence changes, validating choices, and keeping privacy and operability front and center.

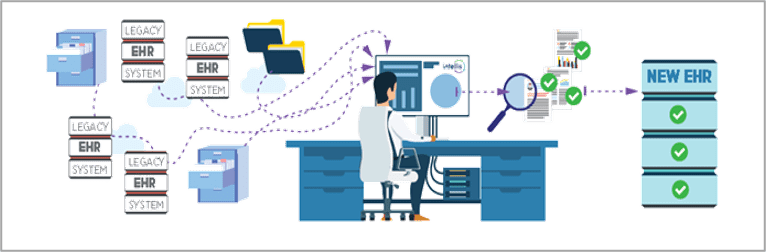

We mapped the as-is system – data sources, abstraction workflows, and downstream use – and identified the key stress points. The to-be design emphasized clear data layers, a simpler, service-oriented architecture, and an evidence-based LLM strategy that clarifies where LLMs add value over classic NLP – and where they do not. MLOps guardrails – for dataset/model versioning, evaluation, and promotion – helped build a reproducible path from experiment to auditable release. Given the regulated environment, we defined the controls required to use LLMs – whether self-hosted or third-party – while meeting privacy and security requirements.

Beyond architecture, we centered the work on how LLMs affect the product, especially Carta's AI assistant for answering registry questions. We helped modernize the underlying engine with state-of-the-art technology, tightened retrieval and provenance so answers can be traced and trusted, recommended how to manage prompts, and identified which available production-ready tooling was worth integrating into Carta's scenarios. We also provided cost and scaling guidance, so product and engineering could plan features and budgets with clear trade-offs.

“Outcome was we revamped our entire approach to AI.”

– Andrew Crowder, VP Engineering (then Director of AI & Operations)

As changes rolled out, the newly LLM-enhanced systems produced more consistent answers with stronger provenance, and leadership reported "visibly better performance metrics" on generated outputs and abstracted forms. The changes also let the team retire some brittle subsystems, improving overall maintainability and shipping cadence, even though formal time-saved metrics weren't tracked during the transition. Privacy and security stayed tightly guarded: both policy and the system's actual configuration ensured that all PHI remained within approved controls and was processed in a HIPAA-compliant manner.

“All items were delivered quickly, with high quality and aligned to our needs. What stood out was their ability to quickly research and answer questions at a level that was easy to understand — and their honesty about what AI could and couldn’t handle.”

– Andrew Crowder

Asked what could have been improved, the answer was simply, “nothing.”

A 2025 financing round followed this engagement. Near-term gains included retiring brittle subsystems, improving maintainability and shipping cadence, strengthening provenance, and delivering higher-quality outputs across generated content and abstracted forms. With privacy and security enforced by design – keeping PHI within HIPAA-aligned controls – the team left with clear cost and scaling trade-offs, enabling AI-assisted workflows to evolve without parallel processes or compliance friction.

For business leaders, that means lower delivery risk, faster time-to-value, and a more capable product built on current AI technology. For technical leaders, it means a cleaner path from experiment to production across AI: defined data layers, reproducible evaluation and promotion, and operational practices that hold up under audit. If you're deciding where AI (including LLMs) truly adds value – and how to adopt it following best practices, without costly errors along the way – book a discovery call. We'll review your goals and architecture and map a privacy-first, cost-aware path to production.
