Automated call center using Amazon Lex, Amazon Connect and Amazon Bedrock Knowledge Bases

Introduction

At datarabbit, our interactions with customers have shown us that businesses constantly seek effective ways to enhance efficiency, reduce costs, and elevate the customer experience through automation. Have you thought about implementing an automated call center that not only optimizes your operations but also significantly improves customer satisfaction?

Automating your call center can yield substantial business advantages:

- Reduced operational costs: Automation can lower call center expenses by 30% to 50% by optimizing staffing and resource allocation.

- Enhanced customer satisfaction: Companies report up to 20% improvements in customer satisfaction scores due to reduced wait times and consistent service quality.

- 24/7 availability: Providing around-the-clock customer support increases customer trust and brand loyalty, leading to higher retention rates.

With Amazon Connect combined with powerful AI tools like Amazon Lex and Amazon Bedrock, establishing an intelligent, scalable call center is now more accessible than ever.

In this blog post, we'll walk you through the process step by step, with real code examples and practical insights, from creating an Amazon Bedrock Knowledge Base to integrating conversational AI with Amazon Lex, covering everything you need to deliver seamless, natural, and effective customer interactions.

Motivation

But why automate your call center in the first place? While cost savings are certainly a motivation, the real game-changer lies in improving the customer journey. Automated systems can provide instant answers to common inquiries, minimize hold times, and ensure effortless transitions between virtual agents and human representatives. In fact, studies show that AI-powered chatbots and virtual assistants are now commonplace, handling up to 80% of routine customer inquiries without human intervention (reference).

Ready to dive in? Let’s explore how Amazon’s ecosystem can turn your call center vision into reality.

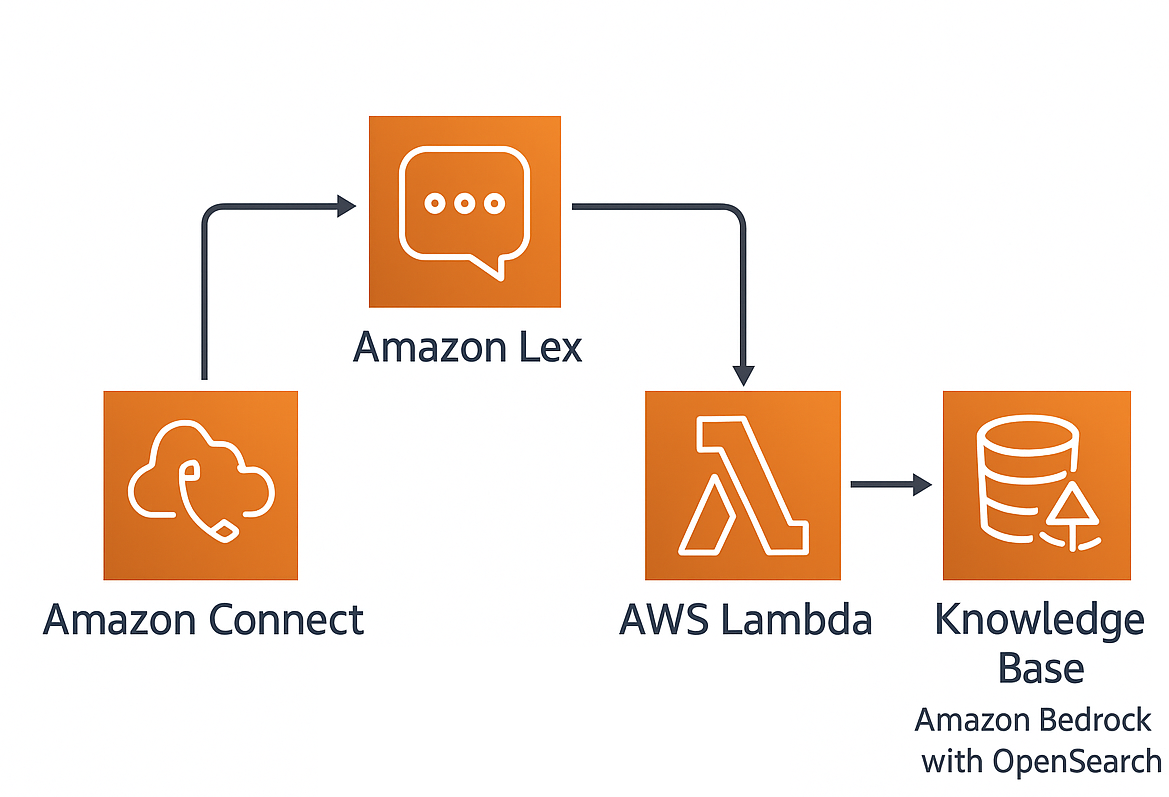

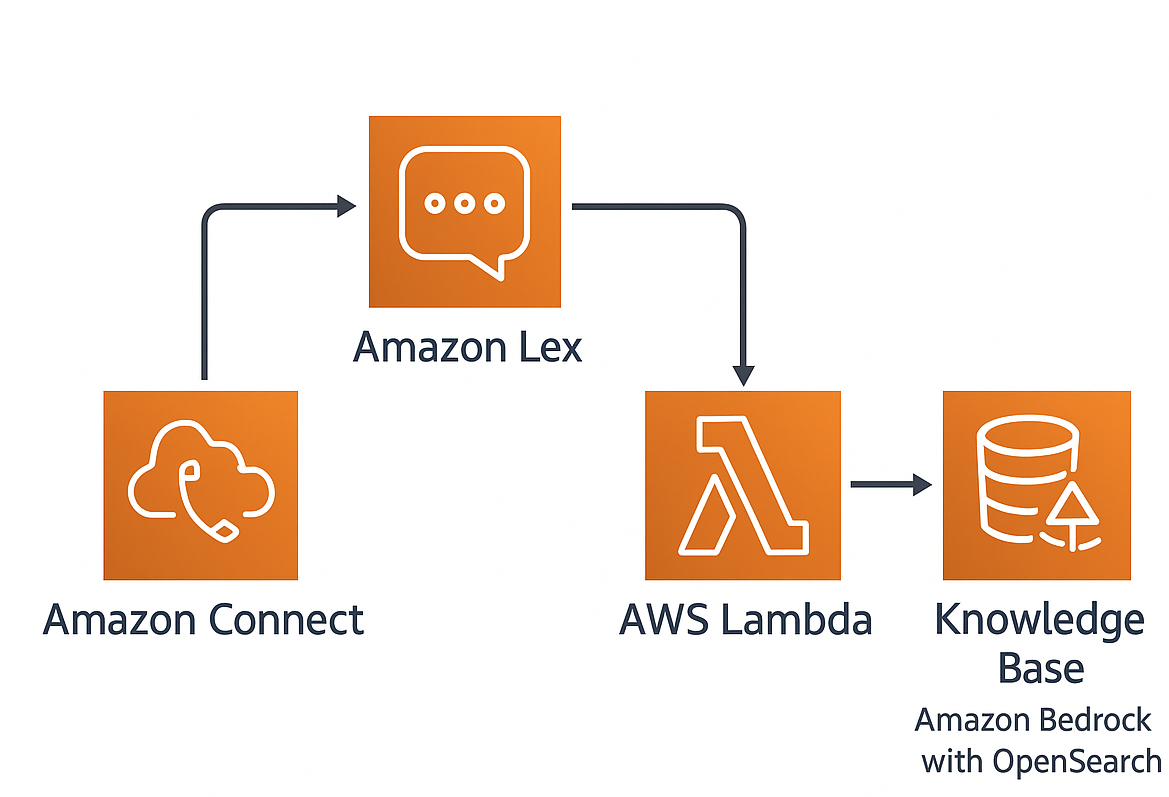

Architecture

To implement an automated call center, our solution leverages the following components:

- Amazon Connect: The backbone of your cloud-based call center.

- Amazon Lex: Powers conversational AI for handling customer interactions.

- AWS Lambda: Executes business logic for processing and dynamic responses.

- Amazon Bedrock Knowledge Base: Provides accurate answers from your own documents, using Amazon OpenSearch Serverless as the vector store.

Now that we're done with the high-level explanations, let's get to setting it all up.

Setting up the automated Call Center

Step 0 - Setting up the Docker image for the Lex Lambda

The Lex Lambda function, responsible for processing Amazon Lex bot interactions, relies on a custom Docker image for flexibility and scalability. The script behind the Lambda has several key components:

- A reusable prompt template for generating AI responses based on input data:

```python
self.prompt_template = """
Human:

===Context===
You are a professional call center worker.
I will provide you a set of search results and the caller's question.

===Task===
Your task is to answer the caller's question, using only the information from the search results.
If the search results do not include the relevant information, you need to answer that you could not retrieve the answer to the caller's question.
Answer according to the requirements.

===Requirements===
Always answer in Polish.
Answer the question in a concise and straightforward manner.
Always answer as a female.
Try not to repeat the same responses.
Only greet the caller once - at the beginning.
If the caller asks about their insurance: first ask about the policy number, then, if the number is correct, answer the question with all the relevant details.
Your answer should be relevant for the context (context is more important than the new search results).

===Caller's question===
The caller asked the following question: "{input}"

===Previous conversation context===
"{conversation_context}"

A:
"""
```

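This prompt keeps the conversational AI consistent and aligned with business rules. Because our client was based in Poland, we had to tell the model explicitly how to speak. In Polish, past-tense verbs and many adjectives agree with the speaker's gender (for example, "sprawdziłam" vs "sprawdziłem", "I checked"), so without the "answer as a female" instruction the model could describe itself in masculine forms while speaking in a female voice, which was the only one available to us.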
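To make the two placeholders concrete, here is a minimal sketch of rendering the template with Python's str.format. The `build_prompt` helper, the abbreviated template and the sample Polish strings are illustrative assumptions, not code from our Lambda. Because the caller's words are passed as format arguments, any braces they contain are inserted literally rather than interpreted as placeholders.

```python
# Abbreviated copy of the template above; in the Lambda it is self.prompt_template.
PROMPT_TEMPLATE = """
Human:
===Caller's question===
The caller asked the following question: "{input}"
===Previous conversation context===
"{conversation_context}"
Assistant:
"""


def build_prompt(template: str, question: str, conversation_context: str = "") -> str:
    """Fill the {input} and {conversation_context} placeholders (hypothetical helper).

    Braces typed by the caller (e.g. "{policy}") are safe: they are passed
    as arguments, so str.format does not try to interpret them.
    """
    return template.format(
        input=question.strip(),
        conversation_context=conversation_context,
    )


if __name__ == "__main__":
    prompt = build_prompt(
        PROMPT_TEMPLATE,
        "Jaki jest status mojej polisy?",
        "customer: Dzień dobry\nbot: Dzień dobry, w czym mogę pomóc?\n",
    )
    print(prompt)
```

Keeping the rendering in one small function makes it easy to unit-test the exact text the model receives.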

- Knowledge Base interaction - A function that retrieves and generates responses from the Bedrock Knowledge Base:

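```python
import os

import boto3

# Values come from the Lambda environment variables defined in template.yaml.
KNOWLEDGE_BASE_ID = os.environ["KNOWLEDGE_BASE_ID"]
FOUNDATION_MODEL_ID = os.environ["MODEL_ID"]
AWS_REGION = os.environ["REGION_NAME"]

# retrieve_and_generate is exposed by the Bedrock Agents runtime client.
bedrock_client = boto3.client("bedrock-agent-runtime", region_name=AWS_REGION)


def getAnswers(questions):
    """Retrieve relevant passages from the knowledge base and generate an answer."""
    knowledgeBaseResponse = bedrock_client.retrieve_and_generate(
        input={'text': questions},
        retrieveAndGenerateConfiguration={
            'knowledgeBaseConfiguration': {
                'knowledgeBaseId': KNOWLEDGE_BASE_ID,
                'modelArn': f"arn:aws:bedrock:{AWS_REGION}::foundation-model/{FOUNDATION_MODEL_ID}"
            },
            'type': 'KNOWLEDGE_BASE'
        })
    return knowledgeBaseResponse
```

This integration fetches relevant answers for user queries from the Bedrock Knowledge Base; the generated answer is in knowledgeBaseResponse['output']['text']. As of June 2025, reranking can also be integrated to help achieve better results.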
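The call above can be extended slightly. The sketch below is a hypothetical variant rather than the code we deployed: it builds the request separately so it can be unit-tested, passes the sessionId returned by Bedrock back on follow-up questions so the service can keep its own conversation state, and pulls the answer out of output.text. The helper names and the `number_of_results=5` default are assumptions; the client is passed in so a stub can replace `boto3.client("bedrock-agent-runtime")` in tests.

```python
from typing import Any, Optional


def build_rag_request(
    question: str,
    knowledge_base_id: str,
    model_arn: str,
    session_id: Optional[str] = None,
    number_of_results: int = 5,  # assumption: tune for your documents
) -> dict[str, Any]:
    """Build keyword arguments for bedrock-agent-runtime retrieve_and_generate."""
    request: dict[str, Any] = {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
                "retrievalConfiguration": {
                    "vectorSearchConfiguration": {
                        "numberOfResults": number_of_results
                    }
                },
            },
        },
    }
    # Reusing Bedrock's session ID lets follow-up questions share context.
    if session_id:
        request["sessionId"] = session_id
    return request


def ask_knowledge_base(client, question: str, knowledge_base_id: str,
                       model_arn: str,
                       session_id: Optional[str] = None) -> tuple[str, str]:
    """Return (answer_text, session_id) for one caller question."""
    response = client.retrieve_and_generate(
        **build_rag_request(question, knowledge_base_id, model_arn, session_id)
    )
    return response["output"]["text"], response["sessionId"]
```

Storing the returned session ID in the Lex session attributes would let the next turn pass it back in.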

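- Incorporating the bot's previous response to maintain the conversation context:

```python
# On the first turn there is no history yet.
session_attributes.setdefault('conversation_context', '')
# Append the caller's words and the bot's reply; this history fills {conversation_context}.
session_attributes['conversation_context'] += (
    f"customer: {event['inputTranscript']}\nbot: {response_text}\n"
)
```

This ensures the bot maintains a coherent and context-aware conversation with the user.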
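Left as is, the context string grows with every turn and is pasted into every prompt. A minimal sketch of one way to bound it, assuming a hypothetical `append_turn` helper and a limit of six exchanges (`MAX_TURNS`, chosen for illustration): each utterance is flattened to a single line so the history can be trimmed by line count, and a missing conversation_context key on the first turn is tolerated.

```python
MAX_TURNS = 6  # assumption: how many customer/bot exchanges to keep


def append_turn(session_attributes: dict, transcript: str, response_text: str,
                max_turns: int = MAX_TURNS) -> dict:
    """Append one exchange to conversation_context and keep only the last turns.

    Each utterance is flattened to a single line so that every turn
    occupies exactly two lines ("customer: ..." and "bot: ...").
    """
    def one_line(text: str) -> str:
        # Collapse newlines and repeated whitespace into single spaces.
        return " ".join(text.split())

    history = session_attributes.get("conversation_context", "")
    lines = [line for line in history.split("\n") if line]
    lines.append(f"customer: {one_line(transcript)}")
    lines.append(f"bot: {one_line(response_text)}")
    lines = lines[-2 * max_turns:]  # drop the oldest exchanges
    session_attributes["conversation_context"] = "\n".join(lines) + "\n"
    return session_attributes
```

The stored value stays a plain string, which is what Lex session attributes hold.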

A Dockerfile ensures the Lambda function is built with all its dependencies and runs in an AWS-compatible environment.

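Deployment involves the following steps:

1. Build the Docker image (the --platform flag matches the x86_64 architecture declared for the function, which matters when building on an ARM machine):

```bash
docker build --platform linux/amd64 -t lex-lambda-handler .
```

2. Push to Amazon ECR (the repository only has to be created once, and Docker must be logged in to the registry before pushing):

```bash
aws ecr create-repository --repository-name lex-lambda-handler --region <region>
aws ecr get-login-password --region <region> | docker login --username AWS --password-stdin <account_id>.dkr.ecr.<region>.amazonaws.com
docker tag lex-lambda-handler:latest <account_id>.dkr.ecr.<region>.amazonaws.com/lex-lambda-handler:latest
docker push <account_id>.dkr.ecr.<region>.amazonaws.com/lex-lambda-handler:latest
```

3. Update the CloudFormation template. In template.yaml, specify the image URI (an image-based function also needs PackageType: Image):

```yaml
AWSLambdaFunction:
  Type: AWS::Lambda::Function
  Properties:
    PackageType: Image
    Code:
      ImageUri: <account_id>.dkr.ecr.<region>.amazonaws.com/lex-lambda-handler:latest
```

4. Deploy the CloudFormation stack: use the AWS CLI or the Management Console to create the stack and provision the Lambda function.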

Step 1 - Define your infrastructure - Knowledge Base

Amazon Bedrock is the newest component of this solution and the one most prone to change. Because of that, we decided to use AWS CloudFormation to deploy the app. Our 'template.yaml' file defines the core infrastructure required for this solution. Here’s what it does:

- Parameters: Captures inputs like S3 bucket names, model IDs, and resource prefixes for customization.

- IAM Roles and Policies: Configures permissions for accessing services like Bedrock, S3, and OpenSearch.

- OpenSearch Collection and Knowledge Base Creation: Creates a vector-based index and knowledge base using OpenSearch and AWS Bedrock for storing and retrieving data efficiently.

- Lambda Functions: Deploys serverless functions to process requests and integrate with other services.

First, before any resources are created, the following roles have to be defined:

- KnowledgeBaseRole - needs permission to list Bedrock models and to invoke the specific models it uses, access to the OpenSearch Serverless API for communicating with collections, and read access to the S3 bucket where the knowledge base files are stored.

```yaml
KnowledgeBaseRole:
  Type: AWS::IAM::Role
  Properties:
    AssumeRolePolicyDocument:
      Version: '2012-10-17'
      Statement:
        - Effect: Allow
          Principal:
            Service:
              - bedrock.amazonaws.com
          Action:
            - sts:AssumeRole
    Policies:
      - PolicyName: BedrockListAndInvokeModel
        PolicyDocument:
          Version: '2012-10-17'
          Statement:
            - Effect: Allow
              Action:
                - bedrock:ListCustomModels
              Resource: '*'
            - Effect: Allow
              Action:
                - bedrock:InvokeModel
              Resource:
                - !Sub arn:aws:bedrock:${AWS::Region}::foundation-model/${ModelID}
                - !Sub arn:aws:bedrock:${AWS::Region}::foundation-model/${EmbeddingModel}
      - PolicyName: OSSPolicy
        PolicyDocument:
          Version: '2012-10-17'
          Statement:
            - Effect: Allow
              Action:
                - aoss:APIAccessAll
              Resource: !Sub arn:aws:aoss:${AWS::Region}:${AWS::AccountId}:collection/*
              Sid: OpenSearchServerlessAPIAccessAllStatement
      - PolicyName: S3ReadOnlyAccess
        PolicyDocument:
          Version: '2012-10-17'
          Statement:
            - Effect: Allow
              Action:
                - s3:Get*
                - s3:List*
                - s3-object-lambda:Get*
                - s3-object-lambda:List*
              Resource:
                - !Sub arn:aws:s3:::${S3BucketName}
                - !Sub arn:aws:s3:::${S3BucketName}/*
```

- OpenSearchIndexLambdaRole - the role needs to be able to create logs, access OpenSearch and perform actions regarding the index.

```yaml
OpenSearchIndexLambdaRole:
  Type: AWS::IAM::Role
  Properties:
    AssumeRolePolicyDocument:
      Version: '2012-10-17'
      Statement:
        - Effect: Allow
          Principal:
            Service: lambda.amazonaws.com
          Action: sts:AssumeRole
    Policies:
      - PolicyName: LambdaBasicExecution
        PolicyDocument:
          Version: '2012-10-17'
          Statement:
            - Effect: Allow
              Action:
                - 'logs:CreateLogGroup'
                - 'logs:CreateLogStream'
                - 'logs:PutLogEvents'
              Resource: 'arn:aws:logs:*:*:*'
      - PolicyName: AllowOpenSearchActions
        PolicyDocument:
          Version: '2012-10-17'
          Statement:
            - Effect: Allow
              Action:
                - aoss:CreateIndex
                - aoss:DescribeIndex
                - aoss:UpdateIndex
                - aoss:BatchWriteDocument
              Resource: !Sub "arn:aws:aoss:${AWS::Region}:${AWS::AccountId}:collection/${ResourcePrefix}-collection"
```

- EncryptionPolicy, NetworkPolicy and DataAccessPolicy - as the AWS documentation notes, an OpenSearch Serverless collection needs a matching encryption policy before it can be created, and a network policy to be reachable. Network policies specify access to a collection and its OpenSearch Dashboards endpoint from public networks or specific VPC endpoints (see Network access for Amazon OpenSearch Serverless). Encryption policies specify a KMS encryption key to assign to particular collections (see Encryption at rest for Amazon OpenSearch Serverless); here we use an AWS-owned key. The DataAccessPolicy decides which principals, in this case the two roles above, are allowed to access the collection and its indexes.

```yaml
EncryptionPolicy:
  Type: AWS::OpenSearchServerless::SecurityPolicy
  Properties:
    Name: !Sub ${ResourcePrefix}-encryption-policy
    Type: encryption
    Description: Encryption policy.
    Policy: !Sub >-
      {"Rules":[{"ResourceType":"collection","Resource":["collection/${ResourcePrefix}-collection"]}],"AWSOwnedKey":true}

NetworkPolicy:
  Type: 'AWS::OpenSearchServerless::SecurityPolicy'
  Properties:
    Name: !Sub ${ResourcePrefix}-network-policy
    Type: network
    Description: Network policy.
    Policy: !Sub >-
      [{"Rules":[{"ResourceType":"collection","Resource":["collection/${ResourcePrefix}-collection"]}, {"ResourceType":"dashboard","Resource":["collection/${ResourcePrefix}-collection"]}],"AllowFromPublic":true}]

DataAccessPolicy:
  Type: 'AWS::OpenSearchServerless::AccessPolicy'
  DependsOn:
    - KnowledgeBaseRole
    - OpenSearchIndexLambdaRole
  Properties:
    Name: !Sub ${ResourcePrefix}-data-access-policy
    Type: data
    Policy: !Sub >-
      [{"Rules":
        [
          {
            "ResourceType":"index",
            "Resource":["index/*/*"],
            "Permission":["aoss:*"]
          },
          {
            "ResourceType":"collection",
            "Resource":["collection/${ResourcePrefix}-collection"],
            "Permission":["aoss:*"]
          }
        ],
        "Principal":[
          "${KnowledgeBaseRole.Arn}",
          "${OpenSearchIndexLambdaRole.Arn}"
        ]
      }]
```

Next, the OpenSearchCollection is created, depending on the EncryptionPolicy, NetworkPolicy and DataAccessPolicy above. It is followed by a Lambda function (CreateOpenSearchIndex in the DependsOn list below) that runs as OpenSearchIndexLambdaRole and creates the vector index the knowledge base expects. Finally, the knowledge base and its data source are configured:

```yaml
BedrockKnowledgeBase:
  Type: AWS::Bedrock::KnowledgeBase
  DependsOn:
    - OpenSearchCollection
    - KnowledgeBaseRole
    - CreateOpenSearchIndex
  Properties:
    KnowledgeBaseConfiguration:
      Type: "VECTOR"
      VectorKnowledgeBaseConfiguration:
        EmbeddingModelArn: !Sub "arn:${AWS::Partition}:bedrock:${AWS::Region}::foundation-model/${EmbeddingModel}"
    RoleArn: !GetAtt KnowledgeBaseRole.Arn
    Name: CustomSupportKnowledgeBase
    StorageConfiguration:
      Type: OPENSEARCH_SERVERLESS
      OpensearchServerlessConfiguration:
        CollectionArn: !Sub "arn:aws:aoss:${AWS::Region}:${AWS::AccountId}:collection/${OpenSearchCollection}"
        VectorIndexName: !Sub ${ResourcePrefix}-index
        FieldMapping:
          VectorField: "bedrock-knowledge-base-default-vector"
          TextField: "AMAZON_BEDROCK_TEXT"
          MetadataField: "AMAZON_BEDROCK_METADATA"

KnowledgeBaseDataSource:
  Type: AWS::Bedrock::DataSource
  DependsOn:
    - BedrockKnowledgeBase
  Properties:
    DataSourceConfiguration:
      Type: S3
      S3Configuration:
        BucketArn: !Sub arn:aws:s3:::${S3BucketName}
    KnowledgeBaseId: !Ref BedrockKnowledgeBase
    Name: !Sub "${ResourcePrefix}-S3DataSource"
```
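The index-creation Lambda itself is not shown above. Below is a sketch of the part that matters, assuming the opensearch-py client (typically authenticated against OpenSearch Serverless with its AWSV4SignerAuth helper and the aoss service name). The mapping's field names must match the FieldMapping in BedrockKnowledgeBase, the index name would be the ${ResourcePrefix}-index used there, and the vector dimension must match the embedding model; 1024 in the test is an assumption (the default output size of Amazon Titan Text Embeddings V2). Sending the success or failure signal back to CloudFormation, which a custom resource must do, is omitted, and `build_index_body` and `create_index` are hypothetical names.

```python
from typing import Any

# Field names must match the FieldMapping in BedrockKnowledgeBase.
VECTOR_FIELD = "bedrock-knowledge-base-default-vector"
TEXT_FIELD = "AMAZON_BEDROCK_TEXT"
METADATA_FIELD = "AMAZON_BEDROCK_METADATA"


def build_index_body(dimension: int) -> dict[str, Any]:
    """Return a k-NN index definition usable as a Bedrock vector store.

    `dimension` must equal the embedding model's output size.
    """
    return {
        "settings": {"index": {"knn": True}},
        "mappings": {
            "properties": {
                VECTOR_FIELD: {
                    "type": "knn_vector",
                    "dimension": dimension,
                    "method": {"name": "hnsw", "engine": "faiss", "space_type": "l2"},
                },
                TEXT_FIELD: {"type": "text"},
                # Metadata is stored with each chunk but not searched.
                METADATA_FIELD: {"type": "text", "index": False},
            }
        },
    }


def create_index(client, index_name: str, dimension: int) -> None:
    """Create the index unless it already exists (client: opensearch-py OpenSearch)."""
    if not client.indices.exists(index=index_name):
        client.indices.create(index=index_name, body=build_index_body(dimension))
```

Checking for an existing index first keeps the function safe to run again, for example on a stack update.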

Step 2 - Define your infrastructure - Amazon Lex Bot

The next step in setting up the automated call center is defining your Amazon Lex Bot, which will serve as the conversational interface for interacting with customers. Amazon Lex integrates seamlessly with other AWS services like Lambda and Bedrock, making it a powerful tool for creating a natural, conversational experience.

Below, we walk through the configuration of the Amazon Lex Bot and its dependencies.

- The Lambda function is central to handling the conversational logic of the Lex Bot. This function communicates with the Bedrock Knowledge Base and other AWS services to fetch relevant information and process user requests.

```yaml
AWSLambdaFunction:
  Type: AWS::Lambda::Function
  DependsOn:
    - AWSLambdaRole
    - BedrockKnowledgeBase
  Properties:
    PackageType: Image
    Role: !GetAtt AWSLambdaRole.Arn
    Code:
      ImageUri: !Ref 'AmazonECRImageUri'
    Architectures:
      - x86_64
    MemorySize: 1024
    Timeout: 300
    Environment:
      Variables:
        LOGGING_LEVEL: !Ref LoggingLevel
        MODEL_ID: !Ref ModelID
        REGION_NAME: !Ref AWS::Region
        KNOWLEDGE_BASE_ID: !GetAtt BedrockKnowledgeBase.KnowledgeBaseId
```
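How the Lambda turns a Lex event into an answer can be sketched as follows. This is a simplified, hypothetical version: `handle` and the injected `answer` callable stand in for our handler and the Bedrock call, and returning ElicitIntent rather than Close is our assumption for a phone call that should keep accepting questions. The response shape, sessionState plus a list of PlainText messages, follows the Lex V2 Lambda response format.

```python
from typing import Any, Callable


def build_lex_response(message: str, session_attributes: dict[str, str]) -> dict[str, Any]:
    """Lex V2 response that speaks `message` and waits for the next question."""
    return {
        "sessionState": {
            "sessionAttributes": session_attributes,
            # ElicitIntent keeps the session open so the caller can ask again;
            # "Close" would end the Lex conversation instead.
            "dialogAction": {"type": "ElicitIntent"},
        },
        "messages": [{"contentType": "PlainText", "content": message}],
    }


def handle(event: dict[str, Any], answer: Callable[[str, str], str]) -> dict[str, Any]:
    """Answer the caller's transcript and append the exchange to the context."""
    session_attributes = dict(event.get("sessionState", {}).get("sessionAttributes") or {})
    context = session_attributes.get("conversation_context", "")
    transcript = event.get("inputTranscript", "")

    # `answer` is a stand-in for the knowledge-base call (prompt + Bedrock).
    response_text = answer(transcript, context)

    session_attributes["conversation_context"] = (
        context + f"customer: {transcript}\nbot: {response_text}\n"
    )
    return build_lex_response(response_text, session_attributes)


# In the Lambda itself this could be wired up as (hypothetical):
# def lambda_handler(event, context):
#     return handle(event, answer=my_answer_function)
```

Injecting `answer` keeps the Lex-specific plumbing testable without calling Bedrock.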

- The Amazon Lex Bot is defined next. It specifies the conversational behavior, including intents, sample utterances, and the integration with the Lambda function that processes requests. The intent definitions are the core of the bot, and in this simple demo we've defined two: the fallback intent, which captures any input that does not match a predefined intent and ensures a graceful response to unexpected queries, and a custom intent, which handles specific customer queries based on its sample utterances. Both intents have their dialog and fulfillment code hooks enabled, so whichever one matches, the caller's utterance is passed to the Lambda function. We also define a custom slot type, Query, with a sample value (manifest) for further customization.

A Lex Bot needs a version and an alias for deployment. These components let you manage and deploy multiple versions of the bot. Our configuration creates a numbered version from the DRAFT bot and points the alias at that version, so the draft can be updated without affecting the bot that callers reach.

```yaml
AmazonLexBot:
  DependsOn:
    - AWSLambdaFunction
    - BotRuntimeRole
  Type: AWS::Lex::Bot
  Properties:
    Name: !Ref AmazonLexBotName
    RoleArn: !GetAtt BotRuntimeRole.Arn
    DataPrivacy:
      ChildDirected: false
    IdleSessionTTLInSeconds: 300
    Description: "Amazon Lex Bot integrated with Bedrock LLM"
    AutoBuildBotLocales: false
    BotLocales:
      - LocaleId: "pl_PL"
        Description: "Amazon Lex Bot integrated with Bedrock LLM"
        NluConfidenceThreshold: 0.40
        VoiceSettings:
          VoiceId: "Ola"
        SlotTypes:
          - Name: "Query"
            Description: "Slot Type description"
            SlotTypeValues:
              - SampleValue:
                  Value: manifest
            ValueSelectionSetting:
              ResolutionStrategy: ORIGINAL_VALUE
        Intents:
          - Name: "FallbackIntent"
            Description: "Default intent when no other intent matches"
            ParentIntentSignature: "AMAZON.FallbackIntent"
            DialogCodeHook:
              Enabled: true
            FulfillmentCodeHook:
              Enabled: true
          - Name: "CustomIntent"
            Description: "Intent for handling custom queries"
            SampleUtterances:
              - Utterance: "Dzień dobry"
              - Utterance: "pomocy"
              - Utterance: "Ile"
              - Utterance: "Kiedy"
              - Utterance: "Jak"
            DialogCodeHook:
              Enabled: true
            FulfillmentCodeHook:
              Enabled: true

AmazonLexBotVersion:
  Type: AWS::Lex::BotVersion
  Properties:
    BotId: !Ref AmazonLexBot
    BotVersionLocaleSpecification:
      - LocaleId: pl_PL
        BotVersionLocaleDetails:
          SourceBotVersion: DRAFT
    Description: AmazonLexBot Version

AmazonLexBotAlias:
  Type: AWS::Lex::BotAlias
  Properties:
    BotId: !Ref AmazonLexBot
    BotAliasName: !Ref AmazonLexBotAliasName
    BotAliasLocaleSettings:
      - LocaleId: pl_PL
        BotAliasLocaleSetting:
          Enabled: true
          CodeHookSpecification:
            LambdaCodeHook:
              CodeHookInterfaceVersion: "1.0"
              LambdaArn: !GetAtt AWSLambdaFunction.Arn
    BotVersion: !GetAtt AmazonLexBotVersion.BotVersion
```

And just like that, with these components in place, your Amazon Lex Bot is fully integrated with the Bedrock Knowledge Base and Lambda functions. This architecture provides a robust framework for handling conversational interactions in your automated call center.
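Before connecting the bot to a phone line, it can help to talk to the alias in text. A minimal sketch using the Lex V2 runtime's recognize_text call, assuming a `boto3.client("lexv2-runtime")` client and the bot and alias IDs (not names) taken from the Lex console; `ask_bot` is a hypothetical helper. Reusing the same session ID across calls keeps the session attributes, and therefore the conversation context, between turns.

```python
import uuid
from typing import Optional


def ask_bot(client, bot_id: str, bot_alias_id: str, text: str,
            session_id: Optional[str] = None, locale_id: str = "pl_PL") -> list[str]:
    """Send one text utterance to a Lex V2 alias and return the bot's messages."""
    response = client.recognize_text(
        botId=bot_id,
        botAliasId=bot_alias_id,
        localeId=locale_id,
        # A fresh session per call unless the caller supplies one to reuse.
        sessionId=session_id or str(uuid.uuid4()),
        text=text,
    )
    return [m["content"] for m in response.get("messages", []) if "content" in m]
```

For example, calling `ask_bot(client, bot_id, alias_id, "Dzień dobry", session_id="test-1")` twice with the same session ID exercises the greeting rule from the prompt.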

Step 3 - Define your infrastructure - Amazon Connect

With the Amazon Lex Bot and Bedrock Knowledge Base set up, the next step is to integrate Amazon Connect. Amazon Connect serves as the backbone of the automated call center, providing cloud-based telephony services and integration with Amazon Lex for conversational interactions. Here's how to configure and integrate Amazon Connect with your solution.

- The Amazon Connect instance is the foundational component of the call center, providing telephony and routing. The CloudFormation configuration below creates an instance that accepts inbound and outbound calls, enables Contact Flow Logs and Contact Lens for analytics, and uses CONNECT_MANAGED identity management, which keeps user and permission management inside the Connect environment. The commented-out resource associates a toll-free or geographic phone number with the instance; you can enable it, but beware that it sometimes throws an error, which is a well-known AWS bug. The two resources after it register the Lex bot alias with the Connect instance and allow Amazon Lex V2 (lexv2.amazonaws.com) to invoke the Lambda function.

```yaml
AmazonConnectInstance:
  Type: "AWS::Connect::Instance"
  Properties:
    Attributes:
      AutoResolveBestVoices: true
      ContactflowLogs: true
      ContactLens: true
      EarlyMedia: true
      InboundCalls: true
      OutboundCalls: true
    IdentityManagementType: CONNECT_MANAGED
    InstanceAlias: !Ref 'AmazonConnectName'

#AmazonConnectPhoneNumber:
#  Type: "AWS::Connect::PhoneNumber"
#  Properties:
#    TargetArn: !GetAtt AmazonConnectInstance.Arn
#    Description: "An example phone number"
#    Type: "TOLL_FREE"
#    CountryCode: PL
#    Tags:
#      - Key: testkey
#        Value: testValue

IntegrationAssociation:
  Type: AWS::Connect::IntegrationAssociation
  Properties:
    InstanceId: !GetAtt AmazonConnectInstance.Arn
    IntegrationType: LEX_BOT
    IntegrationArn: !GetAtt AmazonLexBotAlias.Arn

InvokeFunctionPermission:
  Type: AWS::Lambda::Permission
  Properties:
    FunctionName: !GetAtt AWSLambdaFunction.Arn
    Action: lambda:InvokeFunction
    Principal: lexv2.amazonaws.com
    SourceAccount: !Ref 'AWS::AccountId'
    SourceArn: !GetAtt AmazonLexBotAlias.Arn
```
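If the commented-out AWS::Connect::PhoneNumber resource keeps failing, one workaround is to claim the number outside CloudFormation once the stack is up. A sketch with the Amazon Connect API, assuming a `boto3.client("connect")` client and the instance ARN; `claim_first_available_number` is a hypothetical helper, and the PL/TOLL_FREE defaults simply mirror the commented-out template. A number claimed this way is not managed by the stack, so it has to be released separately when the stack is deleted.

```python
from typing import Optional


def claim_first_available_number(client, instance_arn: str,
                                 country_code: str = "PL",
                                 number_type: str = "TOLL_FREE") -> Optional[str]:
    """Claim the first available number for an Amazon Connect instance.

    Returns the claimed number's ARN, or None if nothing is available.
    """
    available = client.search_available_phone_numbers(
        TargetArn=instance_arn,
        PhoneNumberCountryCode=country_code,
        PhoneNumberType=number_type,
        MaxResults=1,
    ).get("AvailableNumbersList", [])
    if not available:
        return None
    claimed = client.claim_phone_number(
        TargetArn=instance_arn,
        PhoneNumber=available[0]["PhoneNumber"],
    )
    return claimed["PhoneNumberArn"]
```

Returning None instead of raising lets a deployment script report "no numbers available" distinctly from an API error.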

Step 4 - Deploying the infrastructure

With the template.yaml file ready, use a bash script to create the CloudFormation stack, which provisions all the necessary resources, and wait for the stack to reach the CREATE_COMPLETE status.
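For readers who prefer Python to bash, the same step can be sketched with boto3, assuming a CloudFormation client and placeholder parameter values; `deploy_stack` is a hypothetical helper. CAPABILITY_NAMED_IAM acknowledges that the template creates IAM roles, and the stack_create_complete waiter blocks until CREATE_COMPLETE, raising an error if creation fails.

```python
from pathlib import Path


def deploy_stack(client, stack_name: str, template_path: str,
                 parameters: dict[str, str]) -> str:
    """Create the stack from template.yaml and block until CREATE_COMPLETE."""
    response = client.create_stack(
        StackName=stack_name,
        TemplateBody=Path(template_path).read_text(encoding="utf-8"),
        Parameters=[
            {"ParameterKey": key, "ParameterValue": value}
            for key, value in parameters.items()
        ],
        # The template creates IAM roles, so CloudFormation requires this acknowledgement.
        Capabilities=["CAPABILITY_NAMED_IAM"],
    )
    # Raises botocore.exceptions.WaiterError if the stack fails or rolls back.
    client.get_waiter("stack_create_complete").wait(StackName=stack_name)
    return response["StackId"]
```

For example, `deploy_stack(boto3.client("cloudformation"), "call-center", "template.yaml", {"ResourcePrefix": "demo", "S3BucketName": "my-kb-bucket"})`, where the values are placeholders and the remaining parameters (AmazonECRImageUri, ModelID, EmbeddingModel and so on) come from your template. TemplateBody is limited to 51,200 bytes; a larger template has to be uploaded to S3 and passed as TemplateURL instead.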

Once the stack is created, the Knowledge Base still has to be synced manually so that the documents in the S3 bucket are ingested into the vector index. This can be automated with a cron job, but how to populate and sync the Knowledge Base is a topic for another article.
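The sync itself is a single API call that can be scripted. A minimal sketch, assuming a `boto3.client("bedrock-agent")` client and the knowledge base and data source IDs from the Bedrock console; `sync_knowledge_base` is a hypothetical helper that starts an ingestion job and polls until it reaches a terminal status.

```python
import time


def sync_knowledge_base(client, knowledge_base_id: str, data_source_id: str,
                        poll_seconds: float = 10.0) -> str:
    """Start an ingestion job for the S3 data source and wait for it to finish.

    `client` is a boto3 "bedrock-agent" client. Returns the final status.
    """
    job = client.start_ingestion_job(
        knowledgeBaseId=knowledge_base_id,
        dataSourceId=data_source_id,
    )["ingestionJob"]
    # Poll until the job reaches a terminal state.
    while job["status"] not in ("COMPLETE", "FAILED", "STOPPED"):
        time.sleep(poll_seconds)
        job = client.get_ingestion_job(
            knowledgeBaseId=knowledge_base_id,
            dataSourceId=data_source_id,
            ingestionJobId=job["ingestionJobId"],
        )["ingestionJob"]
    return job["status"]
```

A scheduler such as the cron job mentioned above could call this whenever the documents in S3 change.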

Using the final solution

Once the automated call center is fully set up, using it is straightforward for both customers and support teams. Here’s how the process works:

- Customer Interaction: Customers dial the phone number associated with the Amazon Connect instance. Depending on your setup, you may have provided a toll-free or geographic number for easy accessibility.

- AI-Driven Assistance: As the call connects, Amazon Connect routes the interaction to Amazon Lex, which acts as the virtual agent. Customers can ask questions or describe their issues in natural language, and Lex processes these inputs to provide a suitable response.

- Knowledge Base Integration: For complex queries requiring detailed information, the Lex bot queries the Bedrock Knowledge Base via the Lambda function. The Knowledge Base, leveraging OpenSearch, fetches and ranks the most relevant information, ensuring accurate and context-aware responses.

- Seamless Escalation: If the virtual agent cannot resolve the issue, the system smoothly transfers the call to a human agent through Amazon Connect. The agent receives a summary of the conversation so far, ensuring no context is lost and enabling faster resolution.

- 24/7 Support: The system runs round the clock, ensuring that customers can get answers to their inquiries or initiate support requests at any time. This reduces wait times and enhances the overall customer experience.

- Feedback and Logs: Every interaction is logged, enabling you to gather insights into customer needs, improve the AI models, and refine the system for better performance. Contact Lens for Amazon Connect provides analytics and sentiment analysis, giving a deeper understanding of customer satisfaction.

With this automated solution, you deliver efficient, consistent, and scalable customer support, all while reducing operational costs.

Caveats and Challenges

While the automated call center powered by AWS tools provides significant benefits, there are some caveats and challenges we encountered during development and deployment:

1. Language Limitations

- Because the stakeholders behind the solution were a Polish company, supporting Polish had to be prioritized. Unfortunately, many AI-powered tools and pre-trained models have limited or no native support for Polish. For example, Amazon Lex offers a restricted set of voices and language options for Polish, and its Polish support is not as mature as its English support.

- To address this, we crafted specific prompt instructions for the AI to ensure proper responses and tailored the Lex bot to provide a consistent experience, though this required additional manual effort.

2. Knowledge Base Synchronization

- Populating and synchronizing the Bedrock Knowledge Base was not as straightforward as expected. While the setup is robust, keeping the Knowledge Base up to date with relevant and accurate data can be challenging: changes to the source documents are only picked up after the data source is synced again. Keeping it current therefore requires custom workflows or scheduled jobs, which, at the time of writing, AWS did not support natively for our setup.

3. Phone Number Claiming Issues

- The process of associating a phone number with the Amazon Connect instance occasionally led to errors, such as reaching the limit of phone numbers. This is a known AWS bug that can complicate deployment and require manual intervention or support from AWS to resolve.

4. Customization and Tuning

- Every component, from the Knowledge Base to the Lex bot, required significant customization to meet our specific requirements. Balancing out-of-the-box functionality with custom business rules, especially in non-standard use cases like handling insurance-related queries, was time-intensive.

5. Cost Management

- While AWS services like Amazon Connect, Bedrock, and Lambda offer pay-as-you-go pricing, costs can add up quickly, especially when dealing with high call volumes or complex AI interactions. Monitoring and optimizing these costs require careful planning and regular audits.

6. Training and Maintenance

- Maintaining contextually aware and coherent AI responses, especially with a language-specific focus, requires continuous testing and updating of AI prompts and logic. Training the Lex bot to handle edge cases and to understand Polish idiomatic expressions presented a learning curve.

By addressing these challenges with careful planning and leveraging AWS’s powerful ecosystem, we were able to build a robust automated call center that meets the needs of our clients. Despite the obstacles, the result is a highly scalable, intelligent system that enhances customer satisfaction while optimizing operational efficiency.

Summary

Implementing an automated call center with AWS technologies may be a strategic step toward modernizing customer support infrastructure. Utilizing it, you can create a powerful and scalable solution that provides immense value with:

- Continuous, reliable support: Ensuring customers receive immediate responses regardless of the time or day, greatly enhancing customer satisfaction and loyalty.

- Scalable and flexible solution: The AWS ecosystem's scalability accommodates growing business demands, supporting future expansion.

- Efficient escalation to human agents: Recognizing that not all customers prefer automated responses, the system escalates conversations to human representatives whenever questions are complex or customers explicitly request human assistance.

Our recent project demonstrated that even with language-specific challenges, such as adapting the system to the Polish language, careful prompt design and integration with a dynamic knowledge base ensured consistent, natural, and helpful customer interactions. If your organization seeks similar efficiency gains and enhanced customer satisfaction, leveraging AWS's robust tools is a proven, forward-thinking choice.

Ready to Transform Your Customer Service?

datarabbit is here to help you leverage technology for comprehensive customer service transformation. Whether it's automated call centers, intelligent chatbots and assistants, dynamic knowledge bases, or integrated support automation, our tailored solutions can empower your organization to deliver exceptional customer experiences. Contact us today for a personalized consultation and start your journey towards seamless, efficient, and future-ready customer support!